Hello!

I’m trying to set up an Airtable automation that uses Incoming Webhooks. The data comes from an ActiveCampaign automation that sends contact information to my webhook endpoint.

The issue is that if a field is empty in the contact record, ActiveCampaign leaves that property out of the JSON payload entirely instead of sending it with an empty value:

    {
      body: {
        linkedin: undefined
      }
    }

vs.

    {
      body: {}
    }
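Part of the difference comes down to how JSON serialization works. In JavaScript/TypeScript, `JSON.stringify` drops any property whose value is `undefined`, so the first form above cannot be sent as JSON at all. For a key to survive serialization it needs a real value such as `null` or `""`. The sketch below only illustrates that serialization behavior. It makes no claim about how ActiveCampaign builds its payload internally.

```typescript
// Three ways a contact's LinkedIn field might be represented before sending.
const withUndefined = { body: { linkedin: undefined } };
const withNull = { body: { linkedin: null } };
const missing = { body: {} };

// JSON.stringify omits undefined-valued properties entirely,
// so the first and third objects serialize to the same string.
console.log(JSON.stringify(withUndefined)); // {"body":{}}
console.log(JSON.stringify(withNull));      // {"body":{"linkedin":null}}
console.log(JSON.stringify(missing));       // {"body":{}}
```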

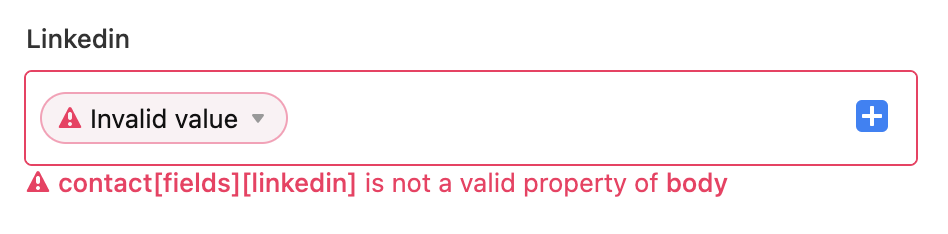

In case of ActiveCampaign, the second response is being sent instead of the first one. Which then makes Airtable tell me there is a “configuration error”:

Should I just ignore this error, or will the automation fail whenever a property is missing from the JSON input?

Is there any workaround here?
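One possible workaround, assuming you can put a small relay (for example, a serverless function) between ActiveCampaign and Airtable, is to normalize each payload so that every expected property is always present before forwarding it. The field list and the `normalizeContact` helper below are hypothetical. The choice of `""` as the filler value is also an assumption (`null` may work just as well). This also assumes the Airtable trigger was configured from a sample payload that contained all of these fields. The actual forwarding request to the Airtable webhook URL is left out.

```typescript
// Hypothetical list of properties the Airtable automation references.
// Adjust it to the fields your ActiveCampaign automation actually sends.
const EXPECTED_FIELDS = ["email", "first_name", "last_name", "linkedin"] as const;

type ContactPayload = Record<string, unknown>;

// Return a copy of the payload in which every expected field is present.
// Missing (or undefined) fields are set to an empty string so they survive
// JSON serialization and keep the payload shape consistent between requests.
function normalizeContact(body: ContactPayload): ContactPayload {
  const normalized: ContactPayload = { ...body };
  for (const field of EXPECTED_FIELDS) {
    if (normalized[field] === undefined) {
      normalized[field] = "";
    }
  }
  return normalized;
}

// Example: "linkedin" and "last_name" were omitted because the contact has none.
const incoming: ContactPayload = { email: "jane@example.com", first_name: "Jane" };
console.log(JSON.stringify(normalizeContact(incoming)));
// {"email":"jane@example.com","first_name":"Jane","last_name":"","linkedin":""}
```

Fields that are already present pass through unchanged, and fields outside the list are not touched.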