If a record is updated, it would be awesome to send the data to a webhook URL. How would you do that?

How to send data to a webhook url?

- November 6, 2022

- 17 replies

- 3969 views

- Genius

- November 6, 2022

2023 Update:

This thread explains the solution in detail, using Make as the example app for receiving the webhook.

There can be a bit of a learning curve with Make, which is why I created this basic navigation video for Make, along with providing the link to Make’s free training courses. There are also many Make experts hanging out there who can answer other Make questions & webhook questions.

————————-

OLD ANSWER FROM 2022:

If you want it to happen automatically, you would need to set up an automation that runs a script. Check out the thread here:

Your Expense Record field is an array (not a string) because it is a linked record field. The easiest & best thing to do would probably be to just send the RecordID on its own to Make’s webhook, and then use the Airtable - Get A Record module to pull in all the rest of the values from your record, including any array fields like your linked record field. This is how you would send just the Record ID to a webhook in Make: let config = input.config(); let url = `https://hook.us1.make.com/xxxxxx…

If you need to send multiple pieces of data to your webhook, that thread shows you how to write the script for that.

However, as also mentioned in that thread, if you’re using an integration tool like Make’s webhooks, the simplest & easiest & best thing to do would be to just send the RecordID on its own to Make’s webhook, and then use the Airtable - Get A Record module to pull in all the rest of the field values from your record, including any linked record fields, attachment fields, etc. Then you would have full access to all of your field values.

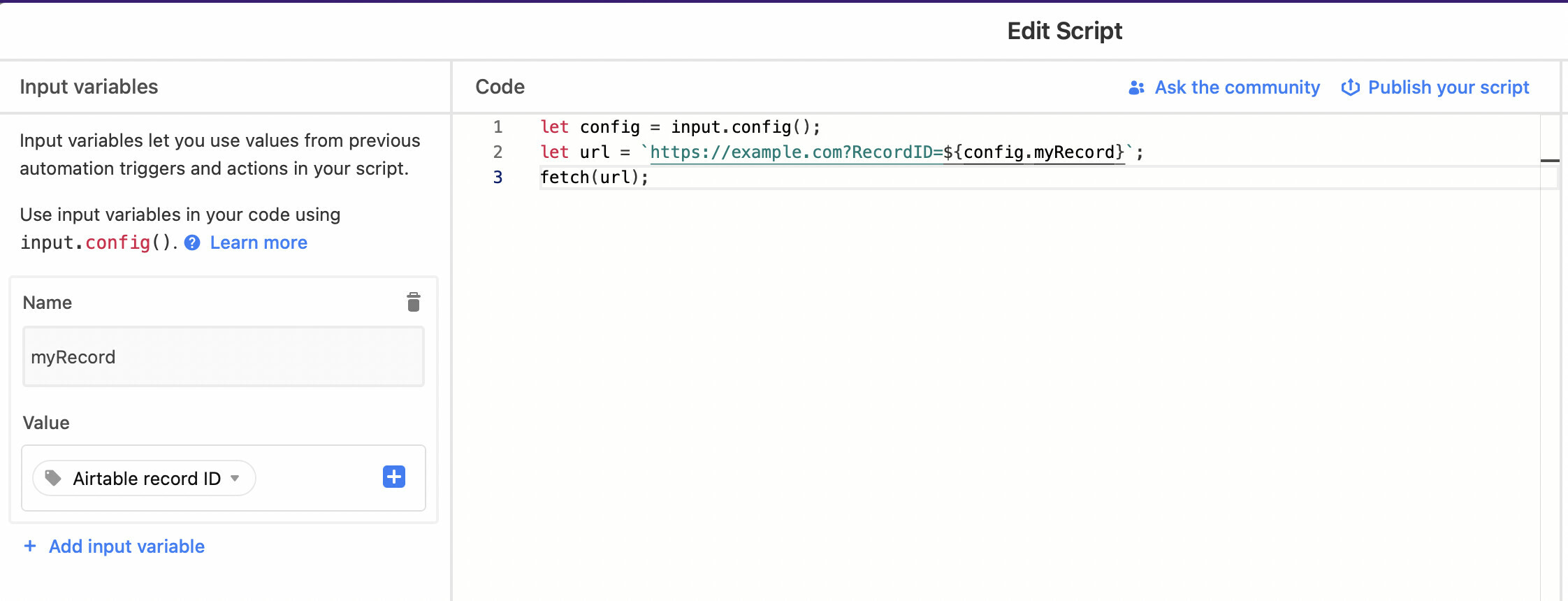

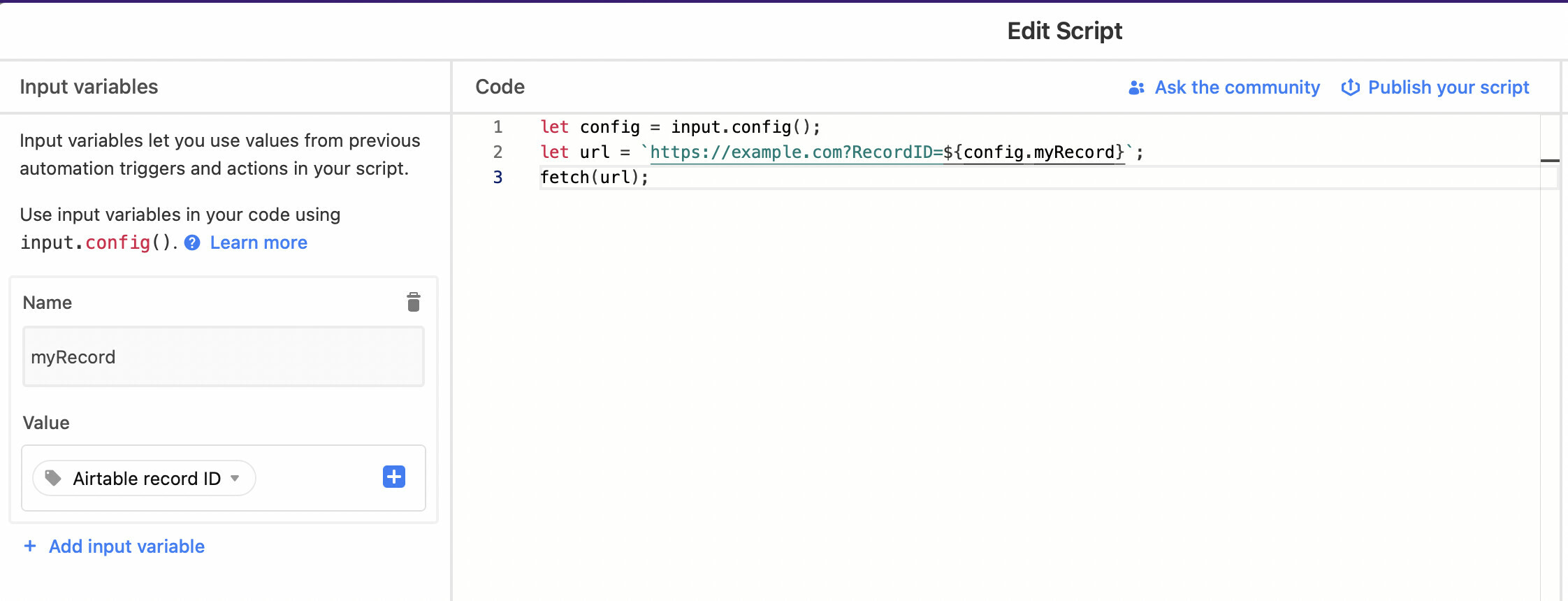

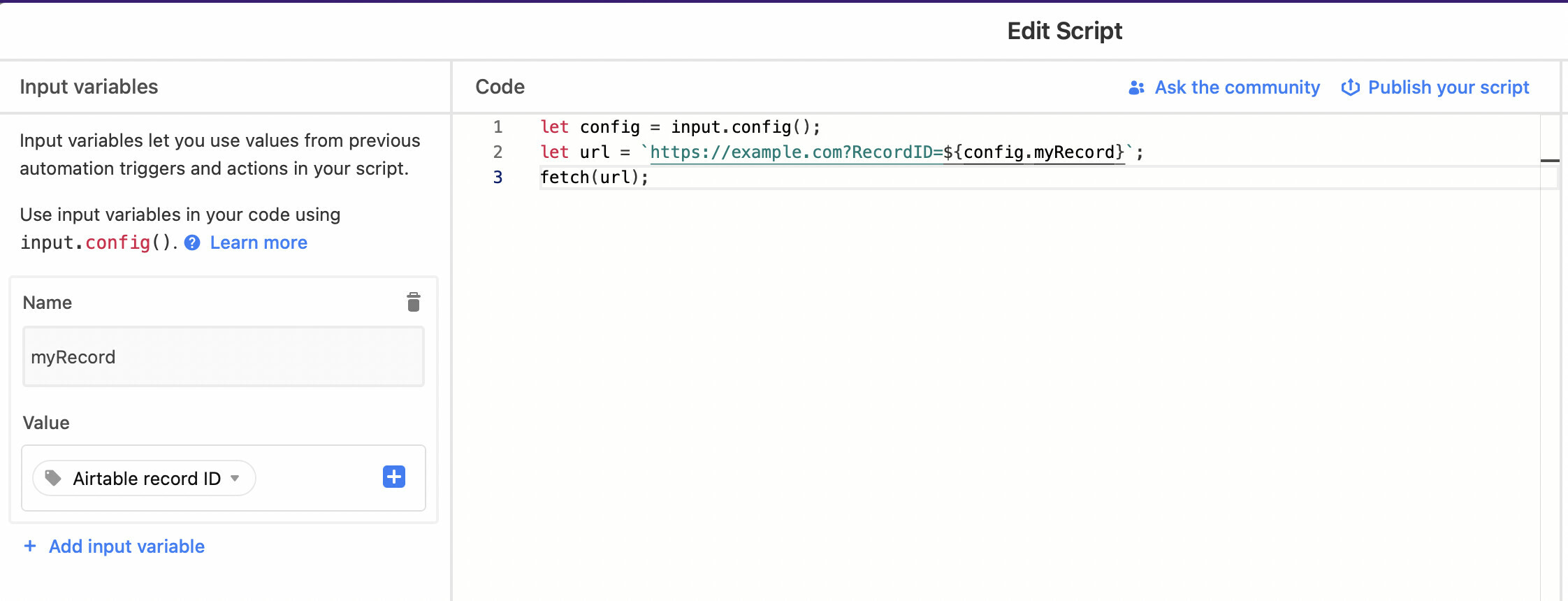

This is how you would send just the Record ID on its own to a webhook in Make:

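```javascript
// Automation script. Assumes an input variable named "myRecord"
// holding the triggering record's ID (configured on the left-hand side).
let config = input.config();

let url = `https://hook.us1.make.com/xxxxxxxxxxxxxxxxxxxxx?RecordID=${config.myRecord}`;

// Await the request so it completes before the script finishes.
await fetch(url);
```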

- Brainy

- November 6, 2022

Hi @Tobias108,

You can see my video about how to send data to a webhook with a script and process it further with Make here:

I usually find it faster to just send the record ID to Make and pick up all other data from the record there, rather than attach all the needed data to the webhook URL as a query (like …&datapoint=this&anotherdatapoint=that).

- Inspiring

- November 7, 2022

In almost every case, this is a fine strategy. In certain edge cases, however, this will create some issues.

Imagine you have a record that changes, and at the instant it was changed, you need another system to rely specifically on that record’s values at that point in time. If you post the record ID to the endpoint, the next API call (or other request) to Airtable will be somewhat latent; it won’t be instant and may have just enough latency to allow other edits of the same record to sneak in. If that matters - and it seems to matter in more cases than you may think - you may have to POST the data payload with the outbound webhook event.

There are other issues to ponder as well. For every webhook call that triggers another inbound request, these are the things that occur:

- Your Airtable instance is busier servicing API and/or Make requests that could be avoided altogether with a webhook POST approach. Few realize that API traffic (including the glue-factories like Make and Zapier) directly affects user performance in the Airtable UI.

- Your security people will frown when they realize the Airtable API key must be shared and utilized outside your business domain.

- You have doubled the attack surfaces, thus raising the security risk envelope.

- You have tripled the communications tasks and traffic if your webhook listener uses the API to fetch the rest of the data (POST → FETCH → RESP); quadrupled if you use Make (POST → FETCH → RESP → POST).

- Your solution is now two or three times as complex (more things to maintain and more things to break).

Just sayin’ …
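A minimal sketch of the "POST the data payload with the outbound webhook event" approach, assuming the values have already been read from the record inside the automation script. The `capturedAt` key and the field names are hypothetical; the point is that the receiver gets the values as they were when the automation ran, instead of reading the record back later when it may have changed.

```javascript
// Sketch: snapshot field values at trigger time and send them in the POST body.
// "capturedAt" and the field names are hypothetical; adapt them to your receiver.
function buildSnapshotRequest(recordId, fieldValues, capturedAt = new Date()) {
  const payload = {
    recordId,
    capturedAt: capturedAt.toISOString(), // when the values were read
    fields: fieldValues,                  // the values as they were at that moment
  };
  // Options object in the shape that fetch(url, options) accepts.
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// In an automation script this would be used roughly as:
//   await fetch(hookUrl, buildSnapshotRequest(recordId, values));
const init = buildSnapshotRequest(
  "recXXXXXXXXXXXXXX",
  { Status: "Approved", Amount: 120 },
  new Date("2022-11-07T12:00:00Z")
);
console.log(init.body);
// {"recordId":"recXXXXXXXXXXXXXX","capturedAt":"2022-11-07T12:00:00.000Z","fields":{"Status":"Approved","Amount":120}}
```

The receiver then has everything it needs in one request, so no second Airtable API call (and no shared API key) is required for this data.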

- Brainy

- November 7, 2022

“Simpler”, “easier”, “faster”, and “better” for whom? The end user or the developer?

Both techniques (sending all the appropriate data versus only the record id) are equally simple and easy for the end user. The end user doesn’t do anything different.

As @Bill.French pointed out, this technique of sending only the record ID and having Make query for the record data is slower for the end user and other users of the base. It also increases network traffic because Make fetches data for all fields in the record, not just the fields needed.

Thus I conclude that the technique of sending only the record ID is primarily “easier” and “faster” for the developer, especially if the developer knows Make but not JavaScript. Using the tool you know is always easier and faster than learning a tool that you do not yet know.

Here are some ways that having Make get the record data is simpler for the Make developer:

- the developer does not need to URL-encode data for a GET request

- the developer does not need to be concerned with long data that might exceed URL length limits when making a GET request

- the webhook works the same whether it is triggered from a button field, scripting extension, or automation script

- when the Make scenario needs data from lots of fields, and the scenario must be triggered from an automation, field name changes can be less of a headache.

- the developer doesn’t need to know how to create a POST request with a body
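The URL-encoding and URL-length points only apply when data travels in the query string. A sketch of a helper that sends small payloads as GET query parameters and falls back to a JSON POST body when the encoded URL gets too long. The 2,000-character threshold is an arbitrary, conservative assumption, not a documented Make or Airtable limit, and `hook.example.com` is a placeholder.

```javascript
// Sketch: small payloads go in the query string (GET), larger ones in a JSON body (POST).
// MAX_URL_LENGTH is an assumed threshold, not a documented limit.
const MAX_URL_LENGTH = 2000;

function chooseRequest(hookUrl, data, maxUrlLength = MAX_URL_LENGTH) {
  const url = new URL(hookUrl);
  for (const [key, value] of Object.entries(data)) {
    // searchParams.set percent-encodes "&", "=", spaces, etc.
    // Assumes primitive values; objects would need JSON in a POST body anyway.
    url.searchParams.set(key, String(value));
  }
  const getUrl = url.toString();
  if (getUrl.length <= maxUrlLength) {
    return { url: getUrl, init: { method: "GET" } };
  }
  // Too long for a query string: send the data as a JSON body instead.
  return {
    url: hookUrl,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(data),
    },
  };
}

const small = chooseRequest("https://hook.example.com/abc", { recordId: "rec123" });
const large = chooseRequest("https://hook.example.com/abc", { notes: "x".repeat(5000) });
console.log(small.init.method, large.init.method); // GET POST
```

In an automation script the result would be used as `await fetch(req.url, req.init)`.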

On the other hand, here are some potential benefits of sending the record data with the original webhook (especially if you know JavaScript).

- less overall network traffic (fewer overall requests, and no transmitting unnecessary field data)

- fewer modules in the Make scenario, thus fewer Make operations

- JavaScript can gather data from multiple sources (e.g. triggering records and the child record) and send the data all at once

- when input fields change, the change can be done completely on the Airtable side without adjusting the Make scenario (e.g. the input field {cost} needs to be changed to {total cost})

- the Make scenario is not tied to a specific table in a specific base (so it is easier to switch from a sandbox base to a production base, or to restore from a snapshot)

Think about that last situation. Imagine that you have a complex base that uses multiple Make webhook scenarios. Something happens that trashes the base. You restore a snapshot. What would you rather do now:

- Reconfigure all the Make scenarios to point to the snapshot

- Reconstruct the original base to match the snapshot

- Just use the snapshot as your new base because the Make scenarios are not tied to a specific base and continue to work with the snapshot

Of course there are other reasons why you might not be able to continue with the snapshot (loss of record history, portals that are tied to a specific base, etc.). But in cases where it is possible, wouldn’t it be nice?
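The point about renaming {cost} to {total cost} on the Airtable side can be illustrated with a small mapping from stable payload keys to Airtable field names. This is a sketch under assumptions: the field names are hypothetical, and `getCellValue` stands in for `record.getCellValue(fieldName)` in an Airtable script. When a field is renamed, only the map changes; the keys the Make scenario sees stay the same.

```javascript
// Sketch: map stable webhook keys to (renamable) Airtable field names.
// If {cost} is renamed to {total cost}, only the value in this map changes;
// the keys the receiving scenario depends on ("cost", "status") stay the same.
const FIELD_MAP = {
  cost: "total cost", // was "cost" before the rename
  status: "Status",
};

// getCellValue stands in for record.getCellValue(fieldName) in an Airtable script.
function buildPayload(recordId, getCellValue, fieldMap = FIELD_MAP) {
  const fields = {};
  for (const [payloadKey, fieldName] of Object.entries(fieldMap)) {
    fields[payloadKey] = getCellValue(fieldName);
  }
  return { recordId, fields };
}

// Fake record data, for demonstration only.
const fakeRecord = { "total cost": 42.5, Status: "Paid" };
const payload = buildPayload("rec123", (name) => fakeRecord[name] ?? null);
console.log(JSON.stringify(payload));
// {"recordId":"rec123","fields":{"cost":42.5,"status":"Paid"}}
```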

- Brainy

- November 7, 2022

Hey @Bill.French!

Yes, thanks for pointing it out. Especially in the case when there are multiple automation runs, these can take quite a while to process, so a record requested 1 min later might be something very different indeed.

“glue-factories” - love it :joy: yes, the glue factories should be applied more to hobbyist RC plane type of applications rather than full cargo flights.

- Inspiring

- November 7, 2022

But should. :winking_face:

Huge advantage

Job security.

Opinion…

As y’all are aware, I’m deeply biased against glue-factory systems like Make. But, @kuovonne’s points are compelling enough to ask -

Should the term “expert” be applied to #no-code integrations?

There’s no question that one can get a lot done quickly at a fraction of the cost with Make. That ability seems to have a place, at least at the innovation funnel, for many organizations. But should it become a pervasive dependency deep inside the [production] architecture?

For the client’s benefit, experts are good at keeping them out of harm’s way. I’m not sure #no-code integrations do that. There’s a brightly-colored magenta elephant in this room, but I don’t think we can see him because the walls are magenta as well.

- Inspiring

- November 7, 2022

Good metaphor. I used glue to build planes before there were tiny little engines for them. It was my first encounter with the term “sparingly apply …”.

- Brainy

- November 7, 2022

hey @Tobias108 ,

Following the discussion, here is a code alternative that sends all the data with the request:

```javascript
// Automation script. Assumes an input variable named "recordId".
const {recordId} = input.config()

const imageTable = base.getTable("Image generation")

const url = "https://hook.com/"

//const fieldsToSend = ["Prompt","Number of images to generate","Status","Images"]

// Send every field in the table
const fieldsToSend = imageTable.fields.map(({name})=> name);

const record = await imageTable.selectRecordAsync(recordId)

const recordData = Object.fromEntries(fieldsToSend.map(key => [key,record?.getCellValue(key)]))

await fetch(url,{
    method: "POST",
    headers: {
        'Content-Type': 'application/json'
    },
    body: JSON.stringify({...recordData,recordId})
    //alternatively you can follow Airtable API response convention
    //body: JSON.stringify({id: recordId, fields:recordData})
})
```

@kuovonne pointed out ( :pray: ) that it actually makes more sense to POST the data, as GET query parameters have limitations. Typing the field names by hand, especially without autocomplete, is a bit hard on me, so I decided to push all fields… which obviously has security and data-size implications.

I hope it helps!
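The two `body` options in the script above differ only in shape. A sketch of both, with a plain object standing in for `recordData` (the values are made up). One subtlety of the flat version: field names and `recordId` share one namespace, so a field literally named "recordId" would be overwritten by the record ID. The `{ id, fields }` shape mirrors how the Airtable Web API represents a record and avoids that collision.

```javascript
// Sketch: the two body shapes from the script above.
function flatBody(recordId, recordData) {
  // Spread puts fields and recordId side by side; a field named
  // "recordId" would be overwritten because recordId comes last.
  return JSON.stringify({ ...recordData, recordId });
}

function airtableStyleBody(recordId, recordData) {
  // Mirrors the { id, fields } shape of Airtable Web API records.
  return JSON.stringify({ id: recordId, fields: recordData });
}

const recordData = { Prompt: "A red bicycle", Status: "To do" };
console.log(flatBody("rec123", recordData));
// {"Prompt":"A red bicycle","Status":"To do","recordId":"rec123"}
console.log(airtableStyleBody("rec123", recordData));
// {"id":"rec123","fields":{"Prompt":"A red bicycle","Status":"To do"}}
```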

- Brainy

- November 7, 2022

Thanks for sharing. Now, for a really sharable script, I recommend rewriting it so that you do not need to hardcode the table name.

Yeah, typing out all field names is a bit annoying and also a maintenance issue. When sharing a generic script, it is much easier to just send all the field data. However, for actual production use, I recommend only sending the necessary data, in the shape the webhook expects. For example, for attachments you usually only need to send the URL and possibly the filename. For single selects, you are probably better off sending the option name as a string rather than the object returned as the cell value.
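A sketch of that "shape the data for the webhook" idea. The type strings are Airtable field types as reported by `field.type` in the scripting API, and the sample values follow the documented cell-value shapes (attachments are objects with `url` and `filename`, select options are objects with `name`, linked records are objects with `id`), but the data itself is made up. In a script you would call it roughly as `shapeCellValue(field.type, record.getCellValue(field))`.

```javascript
// Sketch: reduce Airtable cell values to what a webhook usually needs.
function shapeCellValue(fieldType, value) {
  if (value === null || value === undefined) return null;
  switch (fieldType) {
    case "multipleAttachments":
      // keep only url and filename from each attachment object
      return value.map(({ url, filename }) => ({ url, filename }));
    case "singleSelect":
      return value.name; // the option name as a plain string
    case "multipleSelects":
      return value.map((option) => option.name);
    case "multipleRecordLinks":
      return value.map((link) => link.id); // linked record IDs only
    default:
      return value; // text, numbers, etc. pass through unchanged
  }
}

// Made-up sample values in the documented shapes.
const attachments = [
  { id: "att1", url: "https://example.com/a.png", filename: "a.png", size: 1024, type: "image/png" },
];
console.log(JSON.stringify(shapeCellValue("multipleAttachments", attachments)));
// [{"url":"https://example.com/a.png","filename":"a.png"}]
console.log(shapeCellValue("singleSelect", { id: "sel1", name: "To do", color: "blueLight2" }));
// To do
```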

- Brainy

- November 7, 2022

Should the term “expert” be applied to #no-code integrations?

Yes.

Some “no-code” integrations have the complexity of code and require expertise to build, understand, and maintain. I am in the minority, but I consider these complex systems to be code–they just don’t have to deal with as many spelling, grammar, and authentication issues as traditional code. And that is a good thing, because when you don’t have to worry about low-level things, you can focus on the actual logic at hand.

On the other hand, there are many levels of expertise, and no clearly accepted cross-platform accreditation system for who gets to call themselves experts. So just because someone calls herself an expert doesn’t mean very much.

- Inspiring

- November 8, 2022

Is `let config = input.config();` valid code? I have seen it in multiple threads and tried it, but the Scripting Extension indicates an error and won't let me run the script.

- Brainy

- November 8, 2022

It is valid code for an automation script. It is not valid in Scripting Extension.

- Inspiring

- November 8, 2022

Thanks @kuovonne. I was literally just reading Airtable documentation describing exactly this.

Much appreciated. :slightly_smiling_face:

- New Participant

- October 4, 2023

HI @Greg_F

I've followed your cideo, as i'va an issue getting the Record ID in MAke. Com. Unfortunately, i still have the same issue.

I've attached image from make.com.

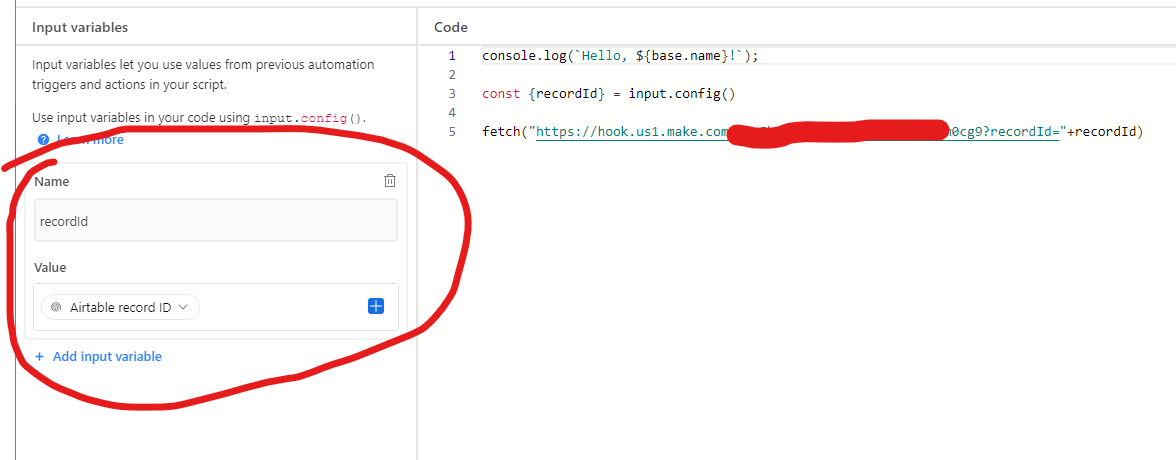

Here is my script on airtbale :

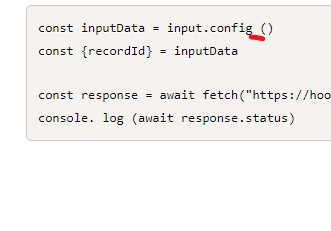

const inputData = input.config ()

const {recordId} = inputData

const response = await fetch("https://hook.eu2.make.com/xxx?recordId="+recordId)

console. log (await response.status)

- Brainy

- October 4, 2023

@cg11 Make sure that you have input variables defined on the left side:

Also, in your example there seems to be a strange space between config and ().

- New Participant

- October 4, 2023

@Greg_F Many thanks, I've found another issue:

recordId="+

The "=". I've removed it, and now the record ID appears in Make.

Many thanks for your help.
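For reference, this is how a standard query-string parser reads the URL with and without the "=". With it, the ID is the value of a parameter named `recordId`; without it, the ID becomes part of the parameter name and the value is empty. This only illustrates standard URL parsing (`hook.example.com` and `rec123` are placeholders); it does not show how Make displays either form.

```javascript
// Sketch: parse both URL variants with the standard URL class.
const withEquals = new URL("https://hook.example.com/xxx?recordId=rec123");
const withoutEquals = new URL("https://hook.example.com/xxx?recordIdrec123");

console.log(withEquals.searchParams.get("recordId"));        // rec123
console.log(withoutEquals.searchParams.get("recordId"));     // null
console.log(withoutEquals.searchParams.has("recordIdrec123")); // true (key with an empty value)
```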

I still have the same issue in Airtable, though. I had it before and thought it came from the missing record ID.

I have x rows of data in Airtable, with the following columns:

- Mot clé (keyword)

- Url article (post url)

- Status

- Structure Chatgpt

I want Make to send the keyword to a ChatGPT prompt, then put the result of the prompt in the "Structure Chatgpt" field of the same row as the keyword.

My trigger fires when an entry meets the following conditions:

Status = to do AND the "Structure Chatgpt" field is empty.

Each time I run Make.com, it puts the result in the same field, not in the one on the keyword's row. I've attached a screenshot (Airtable structure).

Any ideas on how to solve this issue?

Many thanks in advance

CG

- Participating Frequently

- November 6, 2024

Hey everyone, Hannes from miniExtensions here.

I just wanted to add that the miniExtensions form has some really powerful features regarding webhooks! You can trigger webhooks with each form submission, or only in certain cases, based on the data in the record. You can also base the webhook off of an Airtable field, so you can simply use a formula field to generate the correct webhook URL, which is very straightforward and much easier to do than the scripts described in this thread. Of course this would be used if you/your users update records using the miniExtensions form. If you need to update records in Airtable directly and have those updates trigger webhooks, you'd still need the solutions outlined earlier in this thread.

I'd be happy to explain how to set up the formula. Just drop me a message or reply to this thread!
