Hmm, what issue did you face when making the automation? I think I'd set it up as:

1. "Update record" action to link the Table A record to the Table B record

2. "Find record" action to find the record that was just created in Table B

3. "Update record" action to paste the values from the lookup fields into the text fields in Table B

Hmm, what issue did you face when making the automation? I think I'd set it up as:

1. "Update record" action to link the Table A record to the Table B record

2. "Find record" action to find the record that was just created in Table B

3. "Update record" action to paste the values from the lookup fields into the text fields in Table B

I have a problem with the record ID. I can’t manage to find or link the proper record ID from the second table in the update record automation.

I have a problem with the record ID. I can’t manage to find or link the proper record ID from the second table in the update record automation.

If you provide a read-only invite link to a duplicated copy of your base I can troubleshoot this for you!

If you provide a read-only invite link to a duplicated copy of your base I can troubleshoot this for you!

Hi Adam

Thank you for your response allready.

Below you can find the read only link.

https://airtable.com/invite/l?inviteId=invQxYhMKtpVKRIle&inviteToken=beaef4c10e4531d5e2dc5a581912d0264fd78bca5c002ed8c81b6622e9fb99b3&utm_medium=email&utm_source=product_team&utm_content=transactional-alerts

I tried to explain in the base per record what the steps are.

Best regards Michiel

Hi Adam

Thank you for your response allready.

Below you can find the read only link.

https://airtable.com/invite/l?inviteId=invQxYhMKtpVKRIle&inviteToken=beaef4c10e4531d5e2dc5a581912d0264fd78bca5c002ed8c81b6622e9fb99b3&utm_medium=email&utm_source=product_team&utm_content=transactional-alerts

I tried to explain in the base per record what the steps are.

Best regards Michiel

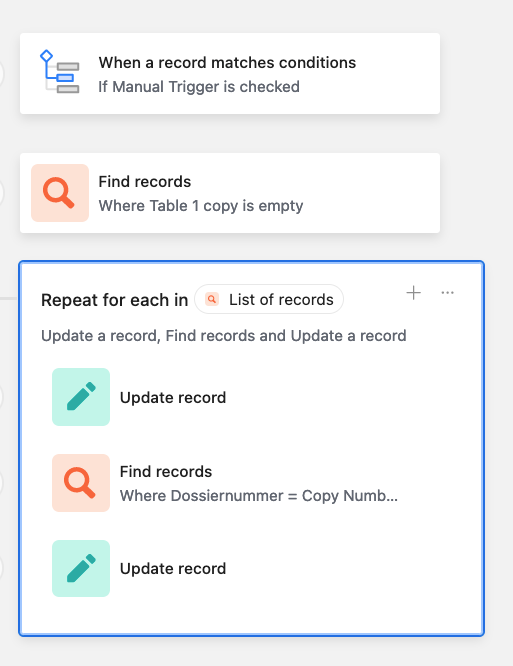

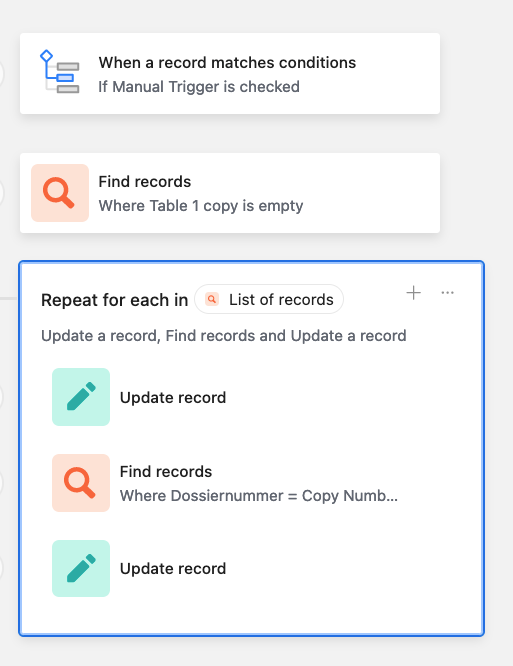

Thanks for the link! I set up the automation like so:

1. "Update record" action to link the Table A record to the Table B record

2. "Find record" action to find the record that was just created in Table B

3. "Update record" action to paste the values from the lookup fields into the text fields in Table B

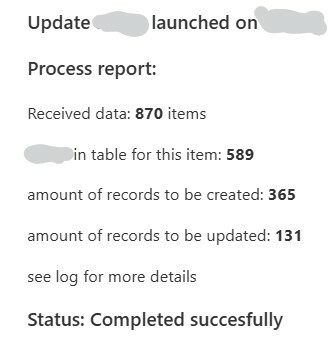

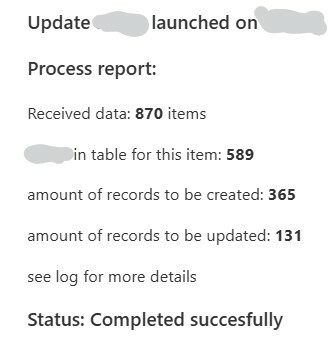

And it now works as expected:

Link to base

Thanks for the link! I set up the automation like so:

1. "Update record" action to link the Table A record to the Table B record

2. "Find record" action to find the record that was just created in Table B

3. "Update record" action to paste the values from the lookup fields into the text fields in Table B

And it now works as expected:

Link to base

Hi Adam.

You make it look so easy 🙂

Do you want to share the base with me so i could my way back to how you designed this?

The base you shared is something differently without any automation in it.

Best regards, Michiel

Hi Adam.

You make it look so easy 🙂

Do you want to share the base with me so i could my way back to how you designed this?

The base you shared is something differently without any automation in it.

Best regards, Michiel

Ah, sorry! I've updated the link and it now goes to the correct base

Hi,

I would use scripting. No matter how much records you have, all in-memory operations will be completed in less than a second. The 'bottleneck' is table create/update operations. How much records expected to be in Table B, to be updated/created?

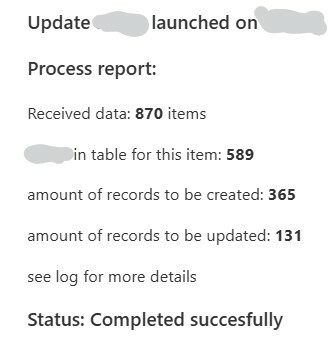

For example, this one completed in 18 seconds

const {rec_id,item,command,name}=input.config()

const source=base.getTable('T1')

const writeTo=base.getTable('T2')

//Fetch data

const url = command

const options = {

method: "GET",

headers: {'Cache-control':'no-cache', Authorization: O } };

const fetchdata=await fetch(url, options).then(request => request.json())

const loaded=fetchdata['result']

console.log(fetchdata)

//Process data

const unpack=(el,{date,status,price,...rest}=el)=>({item,name,date,status,price})

const unwrap=obj=>unpack(obj)

const newdata=loaded.map(unwrap)

//Load existing data

const itemdate=r=>r.getCellValue('item')+r.getCellValue('date')

const query=await writeTo.selectRecordsAsync({fields:writeTo.fields})

const filtered=query.records.filter(r=>r.name===name)

const existed=new Map(filtered.map(r=>[itemdate(r),r.id]))

//Functions to compare, update and create

const create=(el)=>({fields:el})

const update=({item,date,...rest})=>({id:existed.get(item+date)||'',fields:rest})

const compare=['name','status','price']

const otherdata=r=>compare.map(c=>r.getCellValueAsString(c)).join(',')

const databyid=id=>otherdata(query.getRecord(id))

const upd=[];const crt=[]

//Distribute - records with new item+data to create, existing - update. Exclude if there is nothing to update

const needSave=({item,date,...rest})=>databyid(existed.get(item+date))!=Object.values(rest).join(',')

newdata.forEach(j=>{ existed.has(j.item+j.date)? upd.push(j) : crt.push(create(j)) })

const tosave=upd.filter(needSave).map(update)

//Write to table

while (tosave.length) await writeTo.updateRecordsAsync(tosave.splice(0,50))

while (crt.length) await writeTo.createRecordsAsync(crt.splice(0,50))

output.set('status','Completed succesfully')

It's not ready-to-work, I wiped 3 lines with auth and edited some sensitive data , but most of it can be used as example to build yours.

Note: all my data is text. You have single select field, which can't be just written in the same form as read

you can pass value through function

const writeSngSel_orNameItAsYouWish=({name,...rest})=>({name})

or use {name: value.name} as value to write

or just turn second table field to text and write the result of getCellValueAsString()

Ah, sorry! I've updated the link and it now goes to the correct base

Hi Adam

Thanks for sharing. Because of this i now understand the find records automation better. But sadly it still triggers record per record. So if i import 2000 records daily i'm through my automations on less then 15 days.

Still, thanks for the support.

Hi,

I would use scripting. No matter how much records you have, all in-memory operations will be completed in less than a second. The 'bottleneck' is table create/update operations. How much records expected to be in Table B, to be updated/created?

For example, this one completed in 18 seconds

const {rec_id,item,command,name}=input.config()

const source=base.getTable('T1')

const writeTo=base.getTable('T2')

//Fetch data

const url = command

const options = {

method: "GET",

headers: {'Cache-control':'no-cache', Authorization: O } };

const fetchdata=await fetch(url, options).then(request => request.json())

const loaded=fetchdata['result']

console.log(fetchdata)

//Process data

const unpack=(el,{date,status,price,...rest}=el)=>({item,name,date,status,price})

const unwrap=obj=>unpack(obj)

const newdata=loaded.map(unwrap)

//Load existing data

const itemdate=r=>r.getCellValue('item')+r.getCellValue('date')

const query=await writeTo.selectRecordsAsync({fields:writeTo.fields})

const filtered=query.records.filter(r=>r.name===name)

const existed=new Map(filtered.map(r=>[itemdate(r),r.id]))

//Functions to compare, update and create

const create=(el)=>({fields:el})

const update=({item,date,...rest})=>({id:existed.get(item+date)||'',fields:rest})

const compare=['name','status','price']

const otherdata=r=>compare.map(c=>r.getCellValueAsString(c)).join(',')

const databyid=id=>otherdata(query.getRecord(id))

const upd=[];const crt=[]

//Distribute - records with new item+data to create, existing - update. Exclude if there is nothing to update

const needSave=({item,date,...rest})=>databyid(existed.get(item+date))!=Object.values(rest).join(',')

newdata.forEach(j=>{ existed.has(j.item+j.date)? upd.push(j) : crt.push(create(j)) })

const tosave=upd.filter(needSave).map(update)

//Write to table

while (tosave.length) await writeTo.updateRecordsAsync(tosave.splice(0,50))

while (crt.length) await writeTo.createRecordsAsync(crt.splice(0,50))

output.set('status','Completed succesfully')

It's not ready-to-work, I wiped 3 lines with auth and edited some sensitive data , but most of it can be used as example to build yours.

Note: all my data is text. You have single select field, which can't be just written in the same form as read

you can pass value through function

const writeSngSel_orNameItAsYouWish=({name,...rest})=>({name})

or use {name: value.name} as value to write

or just turn second table field to text and write the result of getCellValueAsString()

Hi Alexey, your right i think. And we encountered the bottleneck in the update and comparisson as well. It's expected to be between 1000 and 5000. But i only manage to let our script take 50 records per time, create and compare and then delete the records in Table A (so the next import there won't be double values in table A) (It's not only 1 column but approx. 60 columns per record)

But i think i found a work around.

I think i'll run the first automation to link records Table A to Table B.

Another script that, when triggered, will copy the notes from the lookup field in Table B to a "writeable" field. I noticed that when the value remains the same before and after the update my watch fields are not triggered.

Third script to batch delete all records in table A.

Maybe this will be much quicker then to do a real search, check and update.

Any thoughts on this approach? I'm just thinking about what kind of triggers i could use.

Best regards, Michiel

Hi Adam

Thanks for sharing. Because of this i now understand the find records automation better. But sadly it still triggers record per record. So if i import 2000 records daily i'm through my automations on less then 15 days.

Still, thanks for the support.

Nice! Now that you've managed to combine the automation into a single one, you could attempt to reduce the runs by setting it up like this:

The "Find Records" action can only do up to 1000 records though, and you're doing min 2000. When facing this issue I end up creating a "Task" table where each record represents 8000 records as the Repeating Group function handles up to 8000 items. I'd use a script action that grabs all the relevant records and creates one record per 8000 records found, and have another automation that would trigger once per record created in that table, resulting in 2 automation runs for 8k records, 3 automation runs for 16k records etc

Uses slightly more automation runs than fully scripting it, but I like it because I feel like it's easier to manage; all very subjective though!

Hi Alexey, your right i think. And we encountered the bottleneck in the update and comparisson as well. It's expected to be between 1000 and 5000. But i only manage to let our script take 50 records per time, create and compare and then delete the records in Table A (so the next import there won't be double values in table A) (It's not only 1 column but approx. 60 columns per record)

But i think i found a work around.

I think i'll run the first automation to link records Table A to Table B.

Another script that, when triggered, will copy the notes from the lookup field in Table B to a "writeable" field. I noticed that when the value remains the same before and after the update my watch fields are not triggered.

Third script to batch delete all records in table A.

Maybe this will be much quicker then to do a real search, check and update.

Any thoughts on this approach? I'm just thinking about what kind of triggers i could use.

Best regards, Michiel

That approach looks reasonable. Instead of triggered by record, you can schedule automation to run at a scheduled time and take 1000 records with each run.

You should understand that data visible as lookup is just a reflection of data from other table. So, after you delete records from table A, their lookup in table B became empty.