The SimpleScraper (go to simplescraper dot io) Chrome extension looks super powerful and useful for sucking up data into Airtable!

@chrismessina - Hey Chris, a quick update on this:

We’ve been working on a custom block that allows you to easily import data into Airtable using Simplescraper. Here’s a preview of it in action:

In the demo we import data from Stack Overflow, but the source could be any website that you choose - simply use the dropdown to change recipes.

Let me know if this is similar to what you had in mind?

Airtable’s custom blocks are still in preview so no ETA but wanted to keep you posted and listen to any suggestions that you may have.

Peace, Mike

Hello! Is the project dead?

Looks like import.io was put on ice for new users, so no new signups for free tier accounts, just the sales pitch available :winking_face: so I too am hoping this project makes some progress. Any news, @Mike_ss?

The one issue with Simplescraper is it doesn’t look for text in the page based on some logic, but rather it looks at the structure of the page. For example, there can be a page 1 with text as follows

AAA: XXXX

BBB: YYYYY

CCC: ZZZZZZ

Where AAA, BBB, CCC are my titles of fields.

Page 1 is crawled correctly.

The issue is if some of the elements of the page are missing.

Say there is another page 2 (essentially the same site) that has the same structure, but the element AAA: XXXX is missing (not listed on the page). Because of the way Simplescraper works, when it crawls that page it returns these results:

AAAA: YYYY

BBBB: ZZZZ

CCCC: (will be empty because the data got shifted up to AAAA and BBBB)

Simplescraper should look at the page structure but also look for similar blocks of text. If I create a recipe where AAAA, BBBB, and CCCC are there, and AAAA is missing, then it should still be able to fill in BBBB and CCCC correctly and not move things up.
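To make the idea concrete, here is a minimal sketch of matching by label rather than by position. It is not how Simplescraper works internally; the function name `parse_labeled_lines` and the sample data are made up for illustration, and it assumes each field appears on the page as a "Label: value" line.

```python
def parse_labeled_lines(lines, wanted_labels):
    """Map 'Label: value' lines to fields by label, not by position."""
    found = {}
    for line in lines:
        # Split on the first colon only, so values may contain colons.
        label, sep, value = line.partition(":")
        if sep:  # skip lines that have no colon at all
            found[label.strip()] = value.strip()
    # A label missing from the page becomes an empty string; the other
    # fields keep their own values instead of shifting up.
    return {label: found.get(label, "") for label in wanted_labels}


fields = ["AAA", "BBB", "CCC"]
page_1 = ["AAA: XXXX", "BBB: YYYYY", "CCC: ZZZZZZ"]
page_2 = ["BBB: YYYYY", "CCC: ZZZZZZ"]  # AAA is not listed on this page

print(parse_labeled_lines(page_1, fields))
print(parse_labeled_lines(page_2, fields))
```

For page 2 this leaves AAA empty while BBB and CCC keep their own values. An unwanted row can be skipped simply by leaving its label out of `fields`.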

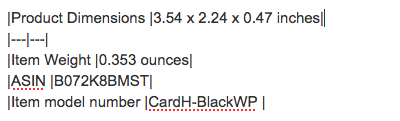

The specific page is Amazon product page.

The specific issue is caused by the product details element for the “Discontinued by manufacturer” property. I am not interested in this one; I just want to start the scrape at Product Dimensions.

- Is Discontinued By Manufacturer : No

I can solve this by having 2 different recipes.

I know this is not the Simplescraper support forum, but since I am scraping into Airtable I wanted to mention it in case @Mike_ss happens to be around reading it.

Other than that it works well. Is an integration with Airtable necessary? What would be the benefit of Airtable buying it? I can use Zapier or Integromat to process the results.

Anyhow, earlier there was a discussion of local vs. cloud scraping. From the Simplescraper FAQ:

What’s the difference between local scraping and cloud scraping?

Using the extension to select and download data is local scraping. It’s simple and free. If you scrape the same pages often, need to scrape multiple pages, or want to turn a website into an API, you can create a scrape recipe that runs in the cloud. Cloud scraping has advantages like speed, page navigation, a history of scrape results, scheduling and the ability to run multiple recipes simultaneously.

@Air_Table3, @chrismessina, @Nicolas_Lapierre, @Bill.French, @itoldusoandso

Hey, please excuse the shortage of updates on this. I progressed further down the block (now App) path before realizing that a direct integration would be a smoother and more user-friendly solution.

So I’ve built the integration and it’s now live at Simplescraper.

Enter your Airtable info (API key, Base ID, table name) and then any website data that you scrape will instantly appear in Airtable. Here’s the tutorial and below is a video of scraping jobs from Indeed.com into Airtable with Simplescraper.

Give it a try and hope it proves useful. Happy to answer questions and take suggestions.
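For anyone curious what those three values are used for, below is a rough sketch of how scraped rows could be sent to Airtable's REST API. It is an illustration only, not the Simplescraper integration's actual code: the function name `build_airtable_requests` and the sample values are hypothetical. It relies on the public create-records endpoint (`POST https://api.airtable.com/v0/{baseId}/{tableName}` with a Bearer token and at most 10 records per request). The sketch only builds the requests and does not send them.

```python
import json
from urllib.parse import quote
from urllib.request import Request


def build_airtable_requests(api_key, base_id, table_name, rows):
    """Build one POST request per batch of up to 10 scraped rows."""
    # Table names may contain spaces, so the name is URL-encoded.
    url = f"https://api.airtable.com/v0/{base_id}/{quote(table_name, safe='')}"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    requests = []
    for start in range(0, len(rows), 10):  # the API accepts 10 records per call
        batch = rows[start:start + 10]
        body = json.dumps({"records": [{"fields": row} for row in batch]})
        requests.append(
            Request(url, data=body.encode("utf-8"), headers=headers, method="POST")
        )
    return requests


# Hypothetical values for illustration only.
rows = [{"Title": f"Job {i}", "Company": "Example Co"} for i in range(12)]
reqs = build_airtable_requests("YOUR_API_KEY", "appXXXXXXXXXXXXXX", "Jobs Board", rows)
print(len(reqs), reqs[0].full_url)
```

Each request could then be sent with `urllib.request.urlopen(req)`. The field names in each row have to match the column names in the target table.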

Yep, we will take a look, very helpful for other sites …

Unfortunately, it gets knocked out when the Amazon site changes its structure.

As I described earlier, SimpleScraper had some limitations for me, so I ended up going with the Airtable clipper tool (more manual and requires lots of fields).

Thanks for the update.

Funny you should mention this - about a decade ago I created a [server-side] JavaScript library that dynamically alters the CSS and HTML structure in non-repeatable ways without changing the rendering, making it impossible for scrapers to capture any data. I just licensed it to a prominent data sciences team to protect their data from automated scraping updates and encourage data consumers to go through the proper mechanisms for real-time updates.

Classic game of cat and mouse, but that 10-year-old cat is getting close to retirement now :winking_face:

LOL! I wish - I’ve updated it 11 times. I think I will retire before it does.

But that’s the point - there’s no way to predict the next rendering change, the mouse is in the snare; end of game.

The mouse is crossed now with Airtable and replicates through automation. Catch me if you can.

Plot twist: Airtable was a feline all along.

Not outright saying so is simply the more profitable option right now.

But if the scraping crowd ever truly starts migrating to Airtable en masse, I think they’d crack down on this use case way before our remoteFetchAsync queues started yielding error after error haha. I’m sure there are domains that get significant traffic from Airtable but most would probably just go for the outright CORS block, which would threaten the platform’s modularity, which is still a core part of its sales pitch, from what I can tell.

So, what’s that, ten, 12 lines of this month’s flavor of node middleware? In order for that Abagnale line to not even reach the target, that is? Not a rhetorical question btw, I just don’t know servers that well.
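For a sense of scale, here is roughly what such a block could look like. This is a sketch in Python (a WSGI middleware) rather than Node, and it assumes the unwanted traffic can be recognized by a substring in its User-Agent header. Whether Airtable's requests are identifiable that way is not something this thread establishes; the names `block_user_agents` and `examplebot` are made up.

```python
def block_user_agents(app, blocked_substrings):
    """Wrap a WSGI app and answer 403 to matching User-Agent headers."""
    blocked = [s.lower() for s in blocked_substrings]

    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(s in agent for s in blocked):
            # Reject before the wrapped application ever sees the request.
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return app(environ, start_response)

    return middleware


def hello_app(environ, start_response):
    """A stand-in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


# "examplebot" is a hypothetical User-Agent fragment.
protected_app = block_user_agents(hello_app, ["examplebot"])
```

So yes, on the order of ten lines. A check like this is also easy to get around, since a client can send any User-Agent it likes.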

Who would dare to issue a wide block when most of the uses are harmless? If it’s legal to scrape LinkedIn data to analyze and predict employee job loyalty, and Facebook photos for face recognition for law enforcement agencies world-wide, without any punishment, on what grounds would anyone completely block Airtable, which provides multipurpose tools, from accessing said websites? The chances that Airtable would sue them are slim, but other individuals may. The information on Amazon and other sites is publicly available information. As long as it is only used to analyze and research the information, it’s probably unlikely. If there is ever a law prohibiting outright scraping, I would say that law will first of all regulate the personal information of individuals. That is likely going to be illegal sooner or later. While the Airtable scraper may get blocked, SimpleScraper would probably get around it by using different IP addresses. It’s not illegal.

This isn’t a question of legality or boldness. And if people are willing to pay for CSS class obfuscation solutions as mentioned above, they’re surely willing to block a productivity tool from accessing their servers.
