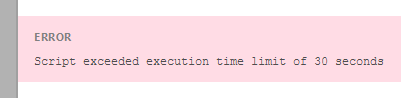

Today, I set aside some time to explore batch techniques for use with await table.updateRecordsAsync(), figuring out the most records that I could update with the 30 seconds allowed within an Automation Script.

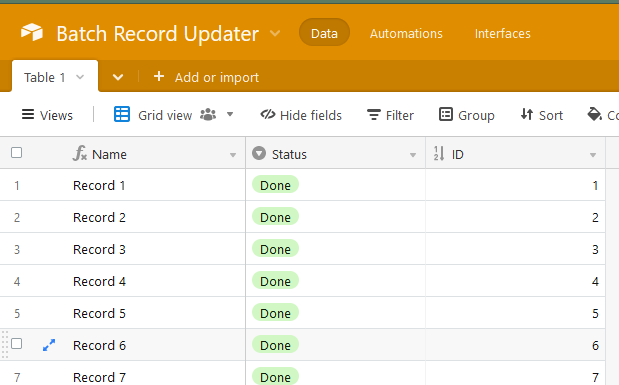

I wrote a script that updates a Status from “Todo” to “Done” in batches of 50 records at a time that updated approximately 7,000 records within the allowed 30 seconds - before the Automation timeout stopped my script.

The same script works as expected within a Scripting Extension App, and takes ~4 minutes for a table with 50,000 records.

Although my batch Automation script was my first attempt, and perhaps a little mediocre around the edges - functionally it worked without error - and from this I’m assuming that the most records anyone could hope to update within one Automation script is 7,000 records.

//Script can update approx 7,000 records before reaching the time out of 30 seconds within an Automation.

//Target Table

let table = base.getTable("Table 1");

let query = await table.selectRecordsAsync({fields: ["Name", "Status"], sorts: [ {field: "Name", direction :"asc"}]});

let records = query.records;

/*returns

const myTable = [

{ id: "rec4da1mVM153RKjc", name: "Record 1" },

{ id: "recypvNNLFf2sQ2Ct", name: "Record 2" },

{ id: "rec4UtiNA4ntT1eSg", name: "Record 3" },

{ id: "reciewbaoRBfo1DvM", name: "Record 4" } ... ]

*/

//Prepare base data with desired update

let myData = records.map( element => ({

id: element.id,

fields: {

"Status": { name: "Done"}

}

}));

/*formats returns

const myDataFormat = [

{ id: 'rec4da1mVM153RKjc', name: 'Record 1', fields: { Status: { name: 'Done' } } },

{ id: 'recypvNNLFf2sQ2Ct', name: 'Record 2', fields: { Status: { name: 'Done' } } },

... ]

*/

//Divide prepared base data into batches of 50 records

let myBatch = [];

let batchSize = 50;

let batchStart = 0;

let batchEnd = batchSize;

let totalIterations = Math.floor(myData.length / batchEnd);

//Process all 50 sized batches

for (let i = 0; i < totalIterations; i++) {

myBatch[i] = myData.slice(batchStart, batchEnd);

batchStart += batchSize;

batchEnd += batchSize;

}

//Process the last odd sized batch

myBatch[totalIterations] = myData.slice(

batchStart,

(myData.length % 50) + batchStart

);

//Stamp the batches with the updates into the table.

for (let i = 0; i < myBatch.length; i++) {

await table.updateRecordsAsync(myBatch[i]);

}

It’s comforting to know that this script will execute manually with the expected results via the Scripting App.

Keen for any pointers, tips or advice that anyone may have on this topic. I found that there was a couple of “gotcha” issues whilst writing, such as not being able to use await table.updateRecodsAsync within a forEach().

I’ll need to make time to read over these forums for batch techniques, as they’re they’re the kinda thing that when you actually need to know and employ them, you’ll at least want to have some theory understood and some practice before attempting to update 50,000 record base :grinning_face_with_sweat: .

Oh, and for the scripting app, due to the time it takes to execute, I used the below loop that keeps the user informed of where the process is at;

for (let i = 0; i < myBatch.length; i++) {

await table.updateRecordsAsync(myBatch[i]);

output.clear();

output.text(`Processing ${myBatch[i][0].name} of ${myData.length}`);

}

So yeah… That’s my Sunday Airtable adventure, I hope you liked it. The end. :open_book: